Relative Risk vs Absolute Risk Reduction

I have written often, and spoken repeatedly to many of my patients, about relative versus absolute risk. Reporting results as relative risk is widespread in the medical literature, the scientific literature, and even in advertising. I am always waiting for that “aha moment” when you understand how relative risk reporting is chicanery. It is used to sell you a product, and to amplify, inflate, or conflate results.

I am prompted to write this blog by a recent meta-analysis published in JAMA Neurology on May 13, 2019, titled Frequency of Intracranial Hemorrhage With Low-Dose Aspirin in Individuals Without Symptomatic Cardiovascular Disease: A Systematic Review and Meta-analysis. Sounds ominous, doesn’t it? Low-dose aspirin increasing the risk of brain hemorrhage? Especially for those of you using low-dose aspirin (81 mg) as a preventive measure.

In June 2015, I published my own analysis of all the major statin studies, which showed negligible benefit. So let’s expand upon this discussion. Let me bombard you with numerous examples.

Analyzing Statin Studies and Efficacy

My favorite reference is Calculated Risks by Gerd Gigerenzer. This is such a powerful book, with simply stated and elegantly presented examples of the misuse of risk analysis and other statistical games. Let me quote briefly from his analysis of the use of Pravastatin, a commonly used statin for “prevention of cardiovascular disease.”

“Absolute risk reduction: the absolute risk reduction is the proportion of patients who die without treatment (placebo) minus those who die with treatment. Pravastatin reduces the number of people who died from 41 to 32 in 1000. That is, the absolute risk reduction is 9 in 1000, which is 0.9%.

Relative risk reduction: the relative risk reduction is the absolute risk reduction divided by the proportion of patients who die without treatment. For the present data, the relative risk reduction is 9÷41, which is 22%. Thus, Pravastatin reduces the risk of dying by 22%.

The relative risk reduction looks more impressive than absolute risk reduction. Relative risks are larger numbers than absolute risks and therefore suggest higher benefits than really exist. Absolute risks are a mind tool that makes the actual benefits more understandable.” – Gerd Gigerenzer
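Gigerenzer’s arithmetic is easy to check. The short Python sketch below recomputes both figures from the 41 and 32 deaths per 1000. The helper name `risk_summary` is purely illustrative, and the number needed to treat (1 ÷ absolute risk reduction, conventionally rounded up) is an extra column that his quote does not show.

```python
import math

def risk_summary(control_events, treated_events, group_size):
    """Return (absolute risk reduction, relative risk reduction, NNT)."""
    control_risk = control_events / group_size   # risk without treatment (placebo)
    treated_risk = treated_events / group_size   # risk with treatment
    arr = control_risk - treated_risk            # absolute risk reduction
    rrr = arr / control_risk                     # relative risk reduction
    nnt = 1 / arr                                # number needed to treat
    return arr, rrr, nnt

# Gigerenzer's pravastatin figures: 41 vs 32 deaths per 1000 people.
arr, rrr, nnt = risk_summary(control_events=41, treated_events=32, group_size=1000)
print(f"Absolute risk reduction: {arr:.1%}")
print(f"Relative risk reduction: {rrr:.0%}")
print(f"Number needed to treat:  {nnt:.1f} (rounded up: {math.ceil(nnt)})")
```

The same 9 deaths in 1000 read as 0.9% in absolute terms, 22% in relative terms, and roughly 112 people treated to prevent one death.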

This really drives the point home, exactly as I had previously written.

Low-Dose Aspirin and Intracranial Hemorrhage

Now let’s look at the recent JAMA Neurology 2019 article.

I will quote the results as relative risks (RR). The hazard ratio (HR), a closely related measure, is also often quoted. An RR or HR of 1.5 means a 50% increased risk. These two are the most commonly cited statistics in medical studies.
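Turning a ratio into the percentage you see in headlines is simple arithmetic: subtract 1 and multiply by 100. A minimal Python sketch follows. The helper `percent_change` is hypothetical, and it treats an HR the same loose way the text does, although strictly an HR compares event rates over time rather than overall risks.

```python
def percent_change(ratio):
    """Express a relative risk (or, loosely, a hazard ratio) as a percent change."""
    return (ratio - 1) * 100

print(f"RR 1.5  -> {percent_change(1.5):+.0f}%")        # +50%
print(f"32/41   -> {percent_change(32 / 41):+.0f}%")    # -22%
```

A ratio below 1 comes out negative, which is how the pravastatin result (32 ÷ 41, about 0.78) becomes a 22% reduction. Note that neither number says anything about how common the event was to begin with.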

Here are the results from the study’s abstract. So frequently, that is all you will see and believe, because most people, including most physicians, will not read or analyze the full paper. We are too busy.

RESULTS: The search identified 13 randomized clinical trials of low-dose aspirin use for primary prevention, enrolling 134,446 patients.

Pooling the results from the random-effects model showed that low-dose aspirin, compared with control, was associated with an increased risk of any intracranial bleeding (8 trials; relative risk, 1.37; 95%CI, 1.13-1.66; 2 additional intracranial hemorrhages in 1000 people), with potentially the greatest relative risk increase for subdural or extradural hemorrhage (4 trials; relative risk, 1.53; 95%CI, 1.08-2.18) and less for intracerebral hemorrhage and subarachnoid hemorrhage.

CONCLUSIONS AND RELEVANCE: Among people without symptomatic cardiovascular disease, use of low-dose aspirin was associated with an overall increased risk of intracranial hemorrhage, and heightened risk of intracerebral hemorrhage for those of Asian race/ethnicity or people with a low body mass index. – Meng Lee et al

The key phrase here is overall increased risk.
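The abstract itself contains enough to recover the absolute picture. As a back-of-envelope Python sketch, assuming the rounded “2 additional per 1000” applies directly to the pooled relative risk of 1.37 (the paper may have derived it differently), the implied baseline rate and the number needed to harm (1 ÷ absolute risk increase) follow:

```python
# Figures quoted in the abstract (8 trials, any intracranial bleeding).
relative_risk = 1.37
extra_per_1000 = 2   # "2 additional intracranial hemorrhages in 1000 people"

# Assumption: extra events = baseline rate * (RR - 1), so the implied baseline is:
implied_baseline_per_1000 = extra_per_1000 / (relative_risk - 1)
implied_aspirin_per_1000 = implied_baseline_per_1000 * relative_risk

# Number needed to harm: people taking aspirin for one additional bleed.
nnh = 1000 / extra_per_1000

print(f"Relative increase:     {relative_risk - 1:.0%}")
print(f"Implied baseline:      ~{implied_baseline_per_1000:.1f} per 1000")
print(f"Implied with aspirin:  ~{implied_aspirin_per_1000:.1f} per 1000")
print(f"Absolute increase:     {extra_per_1000 / 1000:.1%}")
print(f"Number needed to harm: {nnh:.0f}")
```

Under those assumptions, a 37% relative increase amounts to about 0.2 percentage points: roughly 500 people taking low-dose aspirin for one additional intracranial hemorrhage. The implied baseline of about 5 per 1000 is an estimate from rounded figures, not a number reported in the abstract.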

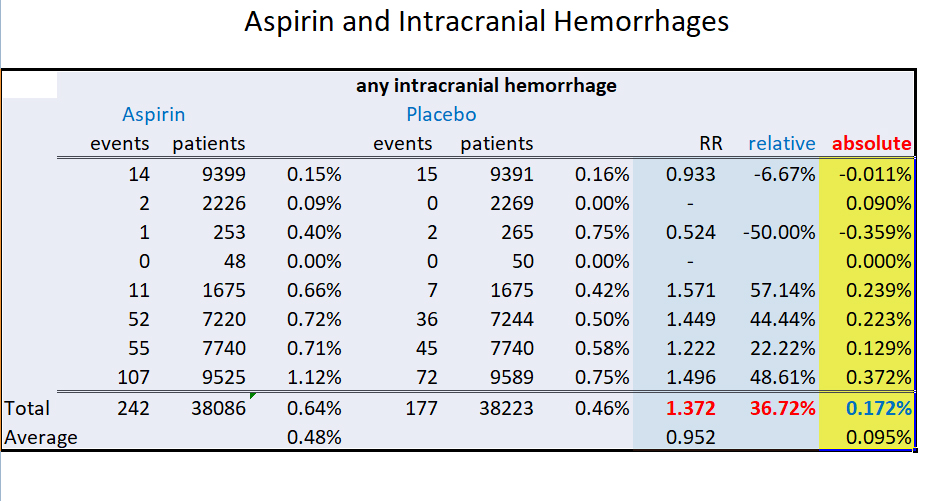

So let me excerpt just one portion of the data from the study to show you why the conclusion is inaccurate and essentially bogus. The true incidence was tiny, so relative comparisons are nearly meaningless.

Pay attention to the extreme right-hand column, highlighted in yellow, which shows the absolute risk I added. This column is almost always absent from medical papers.

Relative vs Absolute Risk Graphic


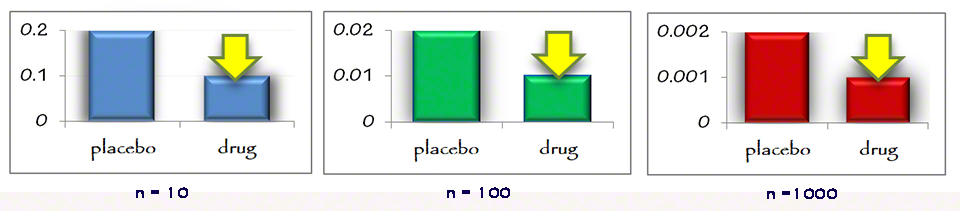

Now let me illustrate this even more graphically. It all depends on the sample size and the true incidence. What is the difference between relative and absolute risk with a sample size of 10, 100, or 1,000? It is the entire story. This first graphic shows a relative risk reduction of 50% across 3 sample sizes. The large yellow arrow shows the same relative risk reduction at every sample size.

This is how they fool you. The relative risk reduction is independent of the sample size.

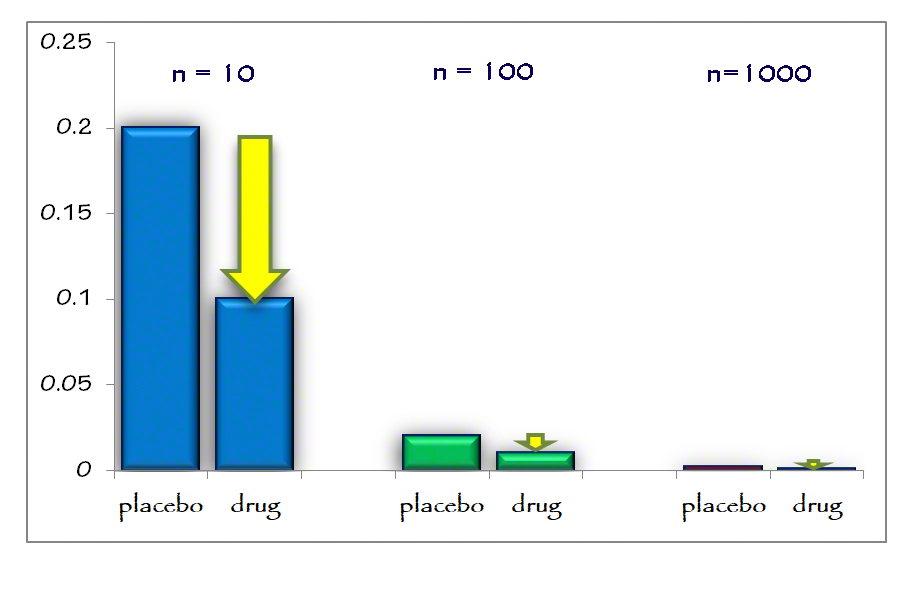

Now let’s look at the absolute risk reduction across these 3 sample sizes.

It should become much clearer. The absolute risk reduction (large yellow arrow) becomes almost invisible as the sample size increases. That is the take-home message today. Absolute risk reduction depends entirely on the incidence, that is, how many events occur in how many people. It is also, incidentally, what is needed to determine the “number needed to treat” (NNT): 1 ÷ the absolute risk reduction, or how many people must be treated for one of them to benefit. How large a group must you treat to prevent even one event?
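The same pattern can be reproduced numerically. This Python sketch uses hypothetical counts (2 events without treatment, 1 event with treatment) in groups of 10, 100, and 1,000; these are illustrative numbers, not values read from the graphics. `Fraction` keeps the arithmetic exact, and the helper name `compare` is an assumption for illustration.

```python
from fractions import Fraction

def compare(control_events, treated_events, group_size):
    """Return (relative risk reduction, absolute risk reduction, NNT) as exact fractions."""
    control_risk = Fraction(control_events, group_size)
    treated_risk = Fraction(treated_events, group_size)
    arr = control_risk - treated_risk   # absolute risk reduction
    rrr = arr / control_risk            # relative risk reduction
    nnt = 1 / arr                       # number needed to treat
    return rrr, arr, nnt

# Same event counts (2 vs 1), ever larger groups, so the incidence keeps falling.
for n in (10, 100, 1000):
    rrr, arr, nnt = compare(2, 1, n)
    print(f"n={n:>5}: RRR={float(rrr):.0%}  ARR={float(arr):.1%}  NNT={float(nnt):.0f}")
```

The relative risk reduction stays at 50% in every row, while the absolute risk reduction falls from 10% to 0.1% and the NNT climbs from 10 to 1,000.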

Poor Medical Reporting

I have previously quoted the highly respected Douglas Altman, D.Sc., from a short 2002 editorial in JAMA, where he writes:

There is considerable evidence that many published reports of randomized controlled trials (RCTs) are poor or even wrong, despite their clear importance. The results of several reviews of published trials [are] briefly summarized in table 1. Poor methodology and reporting are widespread. – Douglas Altman, D.Sc.

He was such a tireless advocate of improving medical reporting, improving peer review and exposing the inadequacy of most published papers.

Two months later, in the August 2002 issue of Scientific American, Benjamin Stix echoed this erudite statistician with his own comments on poor medical reporting, essentially lambasting (or exhorting) the entire medical literature community:

MEDICAL REPORTING

Only the Best

Advertising campaigns routinely hype the most flattering claims to sell their products. Evidently so do papers in medical journals. Researchers at the University of California at Davis School of Medicine found too much emphasis given to favorable statistics in five of the top medical journals: the New England Journal of Medicine, the Journal of the American Medical Association, the Lancet, the Annals of Internal Medicine and the BMJ. The study, which looked at 359 papers on randomized trials, found that most researchers furnish a statistic only for “relative risk reduction”–the percentage difference between the effect of the treatment and a placebo. Just 18 included the more straightforward absolute risk reduction. If a treatment reduced the absolute risk from, say, 4 to 1 percent, it appears more impressive to present only the relative reduction of 75 percent. Researchers also failed to show other statistics that provide a more nuanced picture of the results of clinical trials. The article is in the June 5 Journal of the American Medical Association. – Benjamin Stix [emphasis added for clarity – ed.]
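The 4% to 1% example in the excerpt works out as follows in a small Python sketch. The NNT line is added here for comparison and is not part of the Scientific American piece.

```python
import math

control_risk = 0.04   # 4 percent without treatment (example from the excerpt)
treated_risk = 0.01   # 1 percent with treatment

arr = control_risk - treated_risk   # absolute risk reduction
rrr = arr / control_risk            # relative risk reduction
nnt = 1 / arr                       # number needed to treat

print(f"Relative risk reduction: {rrr:.0%}")
print(f"Absolute risk reduction: {arr:.0%}")
print(f"Number needed to treat:  {nnt:.1f} (rounded up: {math.ceil(nnt)})")
```

Seventy-five percent sounds dramatic; 3 percentage points, or about 34 people treated for one to benefit, is the same result stated plainly.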

Coda

Those of you who are followers of Dr. Peter Attia have also been treated to this same discussion of risk management, risk analysis, and poor statistical methodology.

So the next time you read a headline such as “drug reduces risk 43%,” “vitamin increases risk 14%,” or “this car now accelerates 30% faster,” you will have a better appreciation of risk and performance.

“We won’t get fooled again” — The Who

Philip Lee Miller, MD

Carmel CA 93923
